A look back on 2025

So here we are, the start of another year. The 26th year of the century. Another year older, another year writing the blog, another year ahead.

In this post I want to take a look back at 2025 and what happened there.

Simon continues flying gliders

This year I’ve been working towards the CAA Sailplane license and I’m nearly there. I’m waiting for the weather to improve and my tight turns to be a bit better before I sit the exam.

I bought an LS4, which is hangared at Denbigh and which I fly regularly.

Here she is in her hangar:

She’s a beautiful machine!!

The plan in 2026 is to get the Sailplane license and start doing some cross country flights.

You can watch my general exploits and hooliganism on Soar with Simon.

My Career Goal has been met

Way back when, some 22 years ago, I started a job in Manchester as a junior programmer. It was based in Didsbury and it was a pain to get to. Two trains, both going through Manchester. When I first boarded that train, all those years ago, I wanted nothing more than to be a professional programmer.

I didn’t know it at the time, but that job would take up 20 years of my life. When I started I was what today would be called a Founding Engineer, the person who wrote a lot of the original code of the original platform. The job evolved and grew, the company got bought out and I ended up being the Director of Engineering for their new SaaS product.

Lots of changes in leadership led to me ultimately leaving the company in December 2024, so at the start of 2025 I was on the hunt for a new role. It left a bit of a sour taste - the last year there was terrible - and it did feel like there was unfinished business.

Over the decades I was at MPP Global, my career aspirations grew and I wanted the top job of Chief Technology Officer. I always said that my previous company would be a bad place to be CTO. It was a business with a lot of complexity, multiple overseas operations, lots of aged technology and not really enough revenue to properly own and operate that complexity.

While I was in my final years there, I went and did the CTO Programme at Cambridge University to get a suitable qualification and continued to work on my skills.

This year I got a new job and finally got promoted to the top role of CTO. That’s the end of a project lasting decades.

I’ve enjoyed the new role but it’s been tense with its fair share of twists and turns. But it feels good to actually get there: getting the title, attending the board meetings and genuinely trying to help the company succeed.

With it being a small investor-backed company, there is a risk it goes out of business in 2026. It’s a risk I’m willing to live with.

When I left my previous role in 2024, it felt pretty terrifying after being in work for so long. Now I have the experience of facing that reality and it scares me a lot less than it did in the past.

Another year without alcohol

Early in 2026, it’ll be four years since I last had a drink of any alcohol. Not much to say here other than that the other things I’m doing in life, like flying, the Open University, etc., wouldn’t have been possible without cutting down and ultimately eliminating drinking.

Not to mention the health benefits and the genuinely happier disposition I have these days for having given it up.

Another year of cycling

When I got my new job, I decided to buy a bike that I’d keep at work. This turned out to be a pretty good decision. I got myself a single speed so that there wouldn’t be much maintenance.

I ride the bike each lunch time as a way to get around Birchwood.

Here she is locked up at Manchester before we moved the offices earlier this year:

I’ve always enjoyed cycling, it’s a mixture of exercise and therapy really.

When I’m cycling at home I have a couple of routes that take me past Spike Island, which is always a good place to get some pictures.

Here is one taken in May of 2025:

And here is one taken in December:

People say a lot of bad things about the North West, but if you have the weather it’s as good as anywhere else.

Another year on the Open University Maths Degree

I’m now entering my third calendar year at the Open University. I’m on the fourth module of the degree, which is the last of level 1.

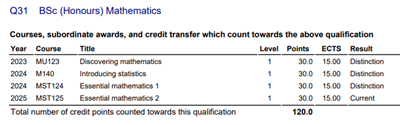

I completed MST124 in June of 2025 and obtained a distinction. My current academic record looks like this:

Obviously, the goal for MST125 is to try and get another distinction, making it four distinctions back to back. While this is the goal, it is becoming harder as the material is getting more challenging with every passing unit.

Once MST125 is completed, I can claim my Certificate of Higher Education. This would be my first formal qualification from the programme. They’re not graded so all it says is that you have reached the minimum standard. It’s still worth having though.

I expect to get the news on that in the middle of July, 2026. Then onwards and upwards to level 2. Another three years of study will get me to the Diploma of Higher Education. It’s a long road for sure.

Personal programming projects

I’ve done more personal programming this year than I’ve done in previous years. I think that’s because I’ve had more head space than before.

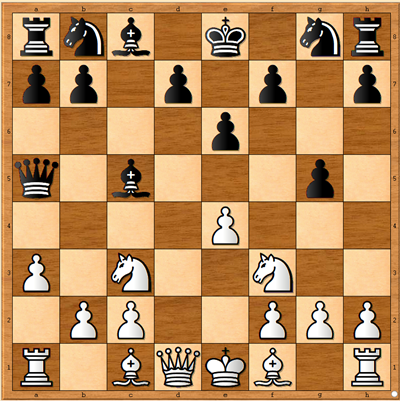

I spent the last three months of the year looking at building my own chess engine. It was smart enough to trap me in the following position:

I was playing white and I moved the pawn from B2 to B4. However, you’ll notice that the pawn on B3 is actually pinned by the queen, so moving that pawn to retake would lose the rook.

When I played the pawn to B4 and the engine captured with bishop to B4, I sounded like Robert Muldoon in Jurassic Park where he says “Clever Girl” to the Velociraptor before being eaten.

Conclusions and thoughts for 2026

Now I’ve taken time to write all that out, I’ve had a pretty active 2025 really. I want to take the same energy into 2026.

I have some ideas for what I want to tackle in 2026:

- Programming
  - I want to write a basic MUD.
    - I’ve wanted to do that project for a while and never gotten around to it. It’d be nice to have a community of even just 20 people to play with really. Have a discord, have a few areas, etc etc.
- Education
  - I want to complete MST125.
    - Stretch goal: Get a distinction.
  - I want to start a level 2 module.
- Flying
  - Get my Sailplane license.
  - Do a cross country of at least 50 kilometers.

Let’s see how much progress I make this year!